In our last discussion we covered the so-called ‘rung two’ of the ladder of causation: interventions and randomised controlled trials. This is an incredibly important area in the design of experiments. We now take the next step and reach counterfactual reasoning. Consider for a second how powerful the ability to ask “what if?” really is.

This Series

- A Causal Perspective

- Causal Models

- Learning Causal Models

- Causality and Machine Learning

- Interventions and Multivariate SCMs

- Reaching Rung 3: Counterfactual Reasoning

- Faithfulness

- The Do Calculus

Counterfactuals

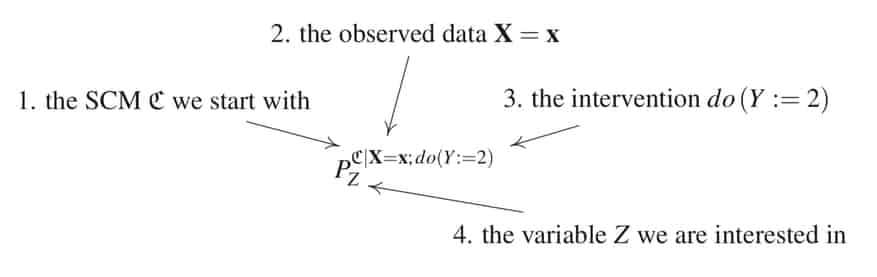

Counterfactuals: Consider some SCM \( C = (S, P_N) \) with vertices \( X \). We define a counterfactual SCM by replacing the distribution of noise variables: \[ C_{X=x} = (S, P_N^{C \mid X=x }), \] where \(x\) is an observation and \( P_N^{C \mid X=x } = P_{N \mid X=x}. \)

Peters points out that the new set of noise variables need not be jointly independent. We can thus view counterfactual statements as do-statements in the new (counterfactual) SCM. Further, we can generalise such that only some of the variables of \( X \) are observed. Consider the counterfactual statement \[ P_Z^{C \mid X=x; do(X=2)}. \] This can be interpreted as asking the question “what would \(Z\) have been had we set \(X\) to 2, given that we actually observed \( X = x \)?”
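To make this concrete, here is a minimal sketch of how such a statement could be evaluated. It assumes a hypothetical two-variable linear SCM, \( X := N_X \) and \( Z := 2X + N_Z \), which is not taken from Peters, and it assumes both variables are observed. Because the assignments are deterministic given the noise, conditioning on the observation pins the noise down exactly; we then replace the assignment of \( X \) and recompute \( Z \).

```python
# Hypothetical SCM (an assumption for illustration, not from the source):
#   X := N_X
#   Z := 2 * X + N_Z

def abduct_noise(x_obs, z_obs):
    """Recover the noise values that are consistent with the observation."""
    n_x = x_obs               # from X := N_X
    n_z = z_obs - 2 * x_obs   # from Z := 2 * X + N_Z
    return n_x, n_z

def counterfactual_z(x_obs, z_obs, x_do):
    """Value Z would have taken under do(X = x_do), given the observation."""
    _, n_z = abduct_noise(x_obs, z_obs)  # condition the noise on the observation
    x_cf = x_do                          # replace the assignment of X
    return 2 * x_cf + n_z                # recompute Z with the inferred noise

# Observed X = 1, Z = 3, so N_Z = 1. Had X been 2, Z would have been 2*2 + 1.
print(counterfactual_z(x_obs=1, z_obs=3, x_do=2))  # 5
```

In a model where the observation does not determine the noise uniquely, the first step would return a distribution over noise values rather than a single point.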

It is very important to note that different SCMs can be both probabilistically and interventionally equivalent, while still not being _counterfactually_ equivalent. In fact, Peters presents two different SCMs which induce the same causal graphical model while being counterfactually different. This has some important implications:

- Causal graphical models do not present enough information to predict counterfactuals.

- Counterfactual statements require additional assumptions to distinguish between “similar” SCMs which are counterfactually different.
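The following sketch illustrates the idea with a small construction of my own (it is not the example Peters gives). Both hypothetical SCMs have binary \( X := N_X \) with independent fair-coin noise; in the first \( Y := X \oplus N_Y \), in the second \( Y := N_Y \). Enumerating the noise values shows that they agree on the observational distribution and under interventions on \( X \), yet disagree on a counterfactual. Interventions on \( Y \) are not enumerated, since they leave \( X := N_X \) untouched in both models.

```python
from itertools import product

# Two hypothetical SCMs over binary X, Y with independent N_X, N_Y ~ Bernoulli(0.5).
def scm_a(n_x, n_y, do_x=None):
    x = n_x if do_x is None else do_x
    return x, x ^ n_y            # Y := X XOR N_Y

def scm_b(n_x, n_y, do_x=None):
    x = n_x if do_x is None else do_x
    return x, n_y                # Y := N_Y

def distribution(scm, do_x=None):
    """Exact distribution of (X, Y), found by enumerating the four noise values."""
    dist = {}
    for n_x, n_y in product([0, 1], repeat=2):
        outcome = scm(n_x, n_y, do_x)
        dist[outcome] = dist.get(outcome, 0.0) + 0.25
    return dist

def counterfactual_y(scm, x_obs, y_obs, do_x):
    """Values of Y under do(X = do_x), keeping only noise consistent with the observation."""
    return {scm(n_x, n_y, do_x)[1]
            for n_x, n_y in product([0, 1], repeat=2)
            if scm(n_x, n_y) == (x_obs, y_obs)}

print(distribution(scm_a) == distribution(scm_b))                  # True
print(all(distribution(scm_a, do_x=v) == distribution(scm_b, do_x=v)
          for v in (0, 1)))                                        # True
print(counterfactual_y(scm_a, 0, 0, do_x=1))                       # {1}
print(counterfactual_y(scm_b, 0, 0, do_x=1))                       # {0}
```

Having observed \( X = 0, Y = 0 \), the first model says \( Y \) would have been 1 had \( X \) been 1, while the second says \( Y \) would still have been 0.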

It is also easy to construct an example which proves that counterfactual statements are not transitive. In other words, knowing “\( Y \) would have been \( y \), had \( X \) been \( x \)” and “\( Z \) would have been \( z \), had \( Y \) been \( y \)” does not necessarily imply “\( Z \) would have been \( z \), had \( X \) been \( x \).”

An interesting point to consider is that counterfactual statements have no direct correspondence with the real world: we can never observe what would have happened under a different action. Interventional statements, on the other hand, correspond well to the randomised control of a variable, as in RCTs. The question of whether counterfactual statements are falsifiable is also important to consider, especially in the context of scientific enquiry and judicial proceedings.

Markov Properties

We are ready to formalise some important statistical properties of causal models.

Markov property: Given a DAG \( G \) and joint distribution \( P_X \), this distribution is said to satisfy:

- Global Markov property with respect to the DAG if \[ A \perp_G B \mid C \implies A \perp B \mid C \] for disjoint sets \(A, B, C \), where \( \perp_G \) denotes d-separation and \( \perp \) indicates independence.

- Local Markov property with respect to the DAG if each variable is independent of its non-descendants given its parents.

- Markov factorisation property with respect to the DAG if \[ p(x) = p(x_1,\dots,x_d) = \prod_{j=1}^d p(x_j \mid PA_j^G). \] Here \( p(x_j \mid PA_j^G) \) are the causal Markov kernels.

These conditions are equivalent provided that the joint distribution has a density with respect to a product measure.
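As a small numerical illustration, the sketch below builds a joint distribution from the Markov factorisation for the chain \( X \to Y \to Z \) and checks the independence that d-separation predicts, namely \( X \perp Z \mid Y \). The kernel values are arbitrary numbers chosen for the example, not anything from the source.

```python
from itertools import product

# Hypothetical kernels for the chain X -> Y -> Z (all variables binary).
p_x = {0: 0.3, 1: 0.7}
p_y_given_x = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}}  # p_y_given_x[x][y]
p_z_given_y = {0: {0: 0.6, 1: 0.4}, 1: {0: 0.1, 1: 0.9}}  # p_z_given_y[y][z]

# Markov factorisation: p(x, y, z) = p(x) p(y | x) p(z | y)
joint = {(x, y, z): p_x[x] * p_y_given_x[x][y] * p_z_given_y[y][z]
         for x, y, z in product([0, 1], repeat=3)}

def x_indep_z_given_y(joint, tol=1e-12):
    """Check p(x, z | y) = p(x | y) p(z | y) for every value of x, y, z."""
    for y in (0, 1):
        p_y = sum(p for (_, yy, _), p in joint.items() if yy == y)
        for x, z in product([0, 1], repeat=2):
            p_xz = joint[(x, y, z)] / p_y
            p_x_y = sum(joint[(x, y, zz)] for zz in (0, 1)) / p_y
            p_z_y = sum(joint[(xx, y, z)] for xx in (0, 1)) / p_y
            if abs(p_xz - p_x_y * p_z_y) > tol:
                return False
    return True

print(x_indep_z_given_y(joint))  # True
```

Here \( Y \) blocks the only path between \( X \) and \( Z \), so the global Markov property requires exactly the independence the check confirms.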

Markov equivalence of graphs: Let \( \mathcal{M}(G) \) denote the set of Markovian distributions with respect to \( G \): \[ \mathcal{M}(G) = \{ P \mid P \text{ satisfies global or local Markov property w.r.t. } G \}. \] Then two DAGs \(G_1 \) and \( G_2 \) are said to be Markov equivalent if \( \mathcal{M}(G_1) = \mathcal{M}(G_2). \) This property ensures that the two graphs entail the same set of (conditional) independence conditions, as we defined earlier. From this definition, the following graphical criterion has been developed.

Graphical criterion for Markov equivalence: Two DAGs are Markov equivalent if and only if they have the same skeleton and the same immoralities (v-structures).
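The criterion is easy to check mechanically. The sketch below is one possible implementation, assuming each DAG is given as a list of `(parent, child)` edges over the same vertex set (isolated vertices are not represented); the function names are my own.

```python
from itertools import combinations

def skeleton(edges):
    """Undirected version of the graph: each edge as an unordered pair."""
    return {frozenset(edge) for edge in edges}

def immoralities(edges):
    """Triples (p, child, q) where p -> child <- q and p, q are not adjacent."""
    skel = skeleton(edges)
    parents = {}
    for parent, child in edges:
        parents.setdefault(child, set()).add(parent)
    found = set()
    for child, pas in parents.items():
        for p, q in combinations(sorted(pas), 2):
            if frozenset((p, q)) not in skel:
                found.add((p, child, q))
    return found

def markov_equivalent(edges_1, edges_2):
    """Same skeleton and same immoralities."""
    return (skeleton(edges_1) == skeleton(edges_2)
            and immoralities(edges_1) == immoralities(edges_2))

chain = [("X", "Y"), ("Y", "Z")]      # X -> Y -> Z
fork = [("Y", "X"), ("Y", "Z")]       # X <- Y -> Z
collider = [("X", "Y"), ("Z", "Y")]   # X -> Y <- Z

print(markov_equivalent(chain, fork))      # True
print(markov_equivalent(chain, collider))  # False
```

The chain and the fork share a skeleton and have no immoralities, so they are Markov equivalent; the collider has the same skeleton but introduces the immorality \( X \to Y \leftarrow Z \).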

Markov blanket: Consider DAG \( G = (V, E) \) and target vertex \( Y \). The Markov blanket of \( Y \) is the smallest set \( M \) such that \[ Y \perp_G V \setminus (\{ Y \} \cup M) \quad \text{given } M.\] If \(P_X \) is Markovian w.r.t. \( G \), then \[ Y \perp V \setminus (\{ Y \} \cup M) \quad \text{given } M.\]

The Markov blanket of Y contains its parents, children and the parents of its children. That is, \[ M = PA_Y \cup CH_Y \cup PA_{CH_Y}. \]
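A minimal sketch of this formula, again assuming the DAG is given as a list of `(parent, child)` edges; the example graph and the function name are hypothetical.

```python
def markov_blanket(edges, target):
    """Parents, children, and other parents of the children of `target`."""
    parents = {a for a, b in edges if b == target}
    children = {b for a, b in edges if a == target}
    co_parents = {a for a, b in edges if b in children and a != target}
    return parents | children | co_parents

# Hypothetical DAG: E -> A -> Y -> C -> D, with B -> C as a second parent of C.
edges = [("E", "A"), ("A", "Y"), ("Y", "C"), ("B", "C"), ("C", "D")]

print(sorted(markov_blanket(edges, "Y")))  # ['A', 'B', 'C']
```

The grandparent `E` and the grandchild `D` are excluded: once `A`, `B` and `C` are given, they are d-separated from `Y`.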

SCMs imply Markov property: Assume \( P_X \) is induced by an SCM with graph \( G \). Then \( P_X \) is Markovian w.r.t. \( G \).

Next up

This has been a pretty definition-heavy discussion, but hopefully it gets you thinking about the different conditions we need to take into account to arrive at a rigorous formulation of causality. Next time we’ll start by discussing causal graphical models and faithfulness.

Resources

This series of articles is largely based on the great work by Jonas Peters, among others:

St John
